Highlight

Comparing Brain Activation With Computer Vision Algorithms

Achievement/Results

Researchers at the Center for the Neural Basis of Cognition at Carnegie Mellon University in Pittsburgh have combined functional magnetic resonance imaging (fMRI) and machine learning technologies to compare five computer vision algorithms against neural activation patterns in the human visual system. The goal of the work is to understand the roles different parts of the brain play in recognizing objects, a complex task that involves multiple stages of processing distributed across many brain areas.

The study was performed by Computer Science doctoral student Daniel Leeds. Leeds was advised by Professor Michael Tarr, a psychologist who studies the human visual system, Professor Tom Mitchell, an expert on machine learning applied to fMRI, and Professor Alexei Efros, a computer vision expert.

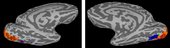

Leeds examined five biologically inspired computer vision models: geometric blur, Gabor filterbanks, the HMAX algorithm, shock graphs, and SIFT (Scale-Invariant Feature Transform). Using information-based techniques known as searchlight and dissimilarity analysis, he looked for statistically significant links between the stimulus representations produced by the five models and the levels of activity, measured by fMRI, in different regions of the visual system. He found that activity in early stages of the visual processing stream was better matched by simpler (Gabor filterbank) or holistic (shock graph) representations, while mid- and high-level visual regions such as lateral occipital cortex and ventral temporal cortex were best matched by the SIFT algorithm. These findings suggest that different visual regions correspond to different forms of visual representation in the brain.
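The idea behind dissimilarity analysis can be illustrated with a minimal sketch. It is not the study's code: the function names, the Euclidean distance measure, the rank correlation, and the permutation test are all assumptions chosen for illustration. The sketch compares how a model and a single group of voxels each separate the same set of stimuli. A searchlight analysis would repeat this comparison for small neighborhoods of voxels across the brain.

```python
import numpy as np


def dissimilarity_matrix(features):
    """Pairwise Euclidean distances between rows (one row per stimulus)."""
    diff = features[:, None, :] - features[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def upper_triangle(matrix):
    """Flatten the entries above the diagonal (each stimulus pair once)."""
    rows, cols = np.triu_indices(matrix.shape[0], k=1)
    return matrix[rows, cols]


def rank(values):
    """Rank values from 0..n-1 (no tie handling; fine for continuous data)."""
    order = np.argsort(values)
    ranks = np.empty(len(values), dtype=float)
    ranks[order] = np.arange(len(values))
    return ranks


def rdm_similarity(model_features, voxel_responses):
    """Rank correlation between model and brain dissimilarity structure.

    model_features:  (n_stimuli, n_model_dims), hypothetical model output
    voxel_responses: (n_stimuli, n_voxels), hypothetical fMRI responses
    """
    model_d = upper_triangle(dissimilarity_matrix(model_features))
    brain_d = upper_triangle(dissimilarity_matrix(voxel_responses))
    return np.corrcoef(rank(model_d), rank(brain_d))[0, 1]


def permutation_p_value(model_features, voxel_responses, n_perm=200, seed=0):
    """Estimate significance by shuffling which response goes with which stimulus."""
    rng = np.random.default_rng(seed)
    observed = rdm_similarity(model_features, voxel_responses)
    count = 0
    for _ in range(n_perm):
        shuffled = voxel_responses[rng.permutation(len(voxel_responses))]
        if rdm_similarity(model_features, shuffled) >= observed:
            count += 1
    return observed, (count + 1) / (n_perm + 1)
```

Under these assumptions, a region whose responses separate stimuli the way a model does yields a high similarity score and a small permutation p-value, and a region unrelated to the model yields a score near zero.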

Leeds used these results to design an algorithm that dynamically varies the visual patterns presented to subjects in an fMRI scanner in order to find optimal stimuli for activating a selected cortical region. Stimulus images are first placed in a SIFT-based feature space, using multidimensional scaling to preserve pairwise distances between them. The algorithm then searches for optimal stimuli using a combination of simulated annealing and simplex search methods. This optimization can be performed in real time as the subject lies in the scanner: responses to previous stimuli guide the search for the next stimulus to be presented. The first real-time scan using this technique on a live subject was recently completed, and the group is now analyzing the data.
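The two steps described above, embedding the stimuli and then searching the embedding, can be sketched as follows. This is an illustrative sketch under stated assumptions, not the group's implementation. It uses classical (eigendecomposition-based) multidimensional scaling and a plain simulated annealing loop, and it omits the simplex search component. The function names, the cooling schedule, and the `measure_response` callback, which stands in for a scanner measurement, are all hypothetical.

```python
import numpy as np


def classical_mds(distances, n_dims=2):
    """Embed items so that Euclidean distances approximate `distances`."""
    n = distances.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ (distances ** 2) @ centering
    eigvals, eigvecs = np.linalg.eigh(gram)
    top = np.argsort(eigvals)[::-1][:n_dims]
    # Clip tiny negative eigenvalues that arise from rounding or
    # non-Euclidean input distances.
    return eigvecs[:, top] * np.sqrt(np.clip(eigvals[top], 0.0, None))


def nearest_stimulus(coords, point):
    """Index of the stimulus whose embedding lies closest to `point`."""
    return int(np.argmin(np.linalg.norm(coords - point, axis=1)))


def anneal_stimulus_search(coords, measure_response, n_trials=60,
                           step=1.0, start_temp=1.0, cooling=0.95, seed=0):
    """Search the embedded stimulus set for a high-response stimulus.

    measure_response(index) -> float is a hypothetical stand-in for the
    measured activity of the selected region after showing stimulus `index`.
    """
    rng = np.random.default_rng(seed)
    current = int(rng.integers(len(coords)))
    current_resp = measure_response(current)
    best, best_resp = current, current_resp
    temp = start_temp
    for _ in range(n_trials):
        # Propose a nearby point in feature space and show the closest stimulus.
        point = coords[current] + rng.normal(scale=step, size=coords.shape[1])
        candidate = nearest_stimulus(coords, point)
        cand_resp = measure_response(candidate)
        # Always accept improvements; accept worse moves with a probability
        # that shrinks as the temperature falls.
        if cand_resp >= current_resp or \
                rng.random() < np.exp((cand_resp - current_resp) / temp):
            current, current_resp = candidate, cand_resp
        if current_resp > best_resp:
            best, best_resp = current, current_resp
        temp *= cooling
    return best, best_resp
```

In this sketch each trial depends only on the responses already collected, which is what allows a search of this kind to run while the subject is in the scanner. A real-time system would also need to handle measurement noise and the delay of the fMRI signal, which the sketch ignores.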

The study was funded by NSF’s IGERT (Integrative Graduate Education and Research Training) program, which was explicitly designed to promote this sort of interdisciplinary research training. At the Center for the Neural Basis of Cognition, a joint program of Carnegie Mellon University and the University of Pittsburgh, students in 12 affiliated doctoral programs receive training in neurophysiology, systems neuroscience, cognitive neuroscience, and computer modeling. IGERT students at the CNBC receive training in a wide variety of techniques, including functional brain imaging, genetic analysis, brain-computer interfacing, and statistical analysis of neuronal data.

Address Goals

Primary: The discovery advances our understanding of visual representations in the brain, and provides an improved technique for conducting fMRI experiments to further explore the nature of the human visual system.

Secondary: The work demonstrates how training in multiple disciplines (cognitive neuroscience, machine learning, and computer vision) enables students to advance our understanding of brain function.