Highlight

What do we see in each other: How the perception of motion drives social interaction

Achievement/Results

Dr. Elizabeth B. Torres and pre-doctoral students in Computer Science and Psychology, in an interdisciplinary course supported by the National Science Foundation Integrative Graduate Education and Research Traineeship (IGERT) program, have developed a novel paradigm to determine which specific parameters of biological motion our perceptual system is tuned to when it extracts social information (e.g., emotional states, gender, the identity of a familiar person, or cognitive-professional predispositions) from our own movements and those of others. This work, which was performed with six Rutgers University graduate students, opens the door to studying perceptual components of the social deficits in persons with Autism Spectrum Disorders that have not yet been explored.

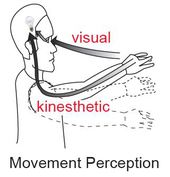

Rutgers Perceptual Science IGERT trainees Gwendolyn Johnson, Polina Yanovich, Elio M. Santos, and Nick Ross worked with Dr. Torres to ask whether we can reliably recognize motions that we have produced ourselves, in contrast with those produced by other people, even when our motions have been distorted with the unique features of someone else’s motions. The information derived from perceiving actions and movements underlies our social interactions, allowing us to infer the intentions of others or to anticipate future actions from even subtle cues. As a social species, reliably extracting information about others is critical to our survival: we must be able to differentiate between friend and foe, hostile and peaceful intentions, and we must be able to make these decisions rapidly. Although related veins of research have addressed similar questions at a phenomenological level using classic techniques such as point-light displays, a deeper explanation of how and why we are able to extract social information solely from the movements of others is still needed. It was hypothesized that our physical, kinesthetic sense of our own motions aligns with our visual sense of the movement of others, which would allow us to compare the motions we see others perform with our own (Figure 1). Importantly, the project described here differs from related work on this subject in that it i) uses a neutral stimulus that does not convey body proportions or other information that would otherwise betray the gender of the individual and ii) draws from the actual physical motions of participants to manipulate the perceived motion.

To address this question, the actual physical motions of participants performing complex motor routines (e.g., a tennis serve or a martial arts routine) were recorded with a motion capture system using electromagnetic sensors. Hundreds of trials were recorded for each participant, and the properties of the trial-to-trial random fluctuations of motion variability were characterized. These stochastic signatures can inform us about ways in which the system processes movements as a form of re-afferent sensory feedback. They can also reveal, across repetitions of the same action, how this form of kinesthetic sensory feedback is integrated with the continuous flow of the motor output. Specifically, histograms of the time to reach the maximum speed at each of the joints (an important proprioceptive parameter linked to the rhythms of the body) were constructed and fit with the skew normal distribution. From these distributions, noise was drawn and added to the actual motions of each subject, creating distorted, but still natural-looking, variants of the motion.
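The fitting and noise-drawing steps described above can be sketched roughly as follows. This is only an illustration under stated assumptions, not the team's actual pipeline: the function names (`time_to_peak_speed`, `fit_skew_normal_moments`, `sample_skew_normal`, `draw_timing_noise`) are hypothetical, the fit uses a simple method-of-moments estimate because the text does not say which fitting procedure was used, and the way the drawn noise is applied to the recorded trajectories is not specified in the source and is therefore left out.

```python
import math
import random
import statistics


def time_to_peak_speed(positions, dt):
    """Time (s) at the start of the sample interval with the highest speed.

    positions: list of (x, y, z) samples for one joint in one trial.
    dt: sampling interval in seconds (assumed constant).
    """
    speeds = [math.dist(p, q) / dt for p, q in zip(positions, positions[1:])]
    return speeds.index(max(speeds)) * dt


def fit_skew_normal_moments(samples):
    """Method-of-moments estimate of skew normal (location, scale, shape).

    Assumes the samples are not all identical (nonzero spread).
    """
    n = len(samples)
    mean = statistics.fmean(samples)
    sd = statistics.pstdev(samples)
    skew = sum((x - mean) ** 3 for x in samples) / (n * sd ** 3)
    # A skew normal cannot have |skewness| above roughly 0.995, so clip.
    skew = max(-0.99, min(0.99, skew))
    g = abs(skew) ** (2 / 3)
    c = ((4 - math.pi) / 2) ** (2 / 3)
    delta = math.copysign(math.sqrt((math.pi / 2) * g / (g + c)), skew)
    scale = sd / math.sqrt(1 - 2 * delta ** 2 / math.pi)
    loc = mean - scale * delta * math.sqrt(2 / math.pi)
    shape = delta / math.sqrt(1 - delta ** 2)
    return loc, scale, shape


def sample_skew_normal(loc, scale, shape, rng):
    """Draw one skew normal value from two independent standard normals."""
    delta = shape / math.sqrt(1 + shape ** 2)
    z0, z1 = rng.gauss(0, 1), rng.gauss(0, 1)
    return loc + scale * (delta * abs(z0) + math.sqrt(1 - delta ** 2) * z1)


def draw_timing_noise(peak_times, n, rng):
    """Draw n zero-mean timing perturbations from the fitted distribution.

    Centering on the fitted mean is an assumption made for this sketch.
    """
    loc, scale, shape = fit_skew_normal_moments(peak_times)
    delta = shape / math.sqrt(1 + shape ** 2)
    fitted_mean = loc + scale * delta * math.sqrt(2 / math.pi)
    return [sample_skew_normal(loc, scale, shape, rng) - fitted_mean
            for _ in range(n)]
```

In this sketch, one participant's per-trial peak-speed times would be passed to `draw_timing_noise` to obtain perturbations carrying that participant's stochastic signature. A maximum-likelihood fit (for example with a statistics library) could be swapped in for the moment-based estimate.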

In this paradigm, a computer-animated avatar was created to display the motions of participants in a perceptual decision-making task. Several unique avatars were created for each participant, one with the actual motions of the participant preserved and the rest distorted with either the stochastic properties of their own motions or those of other participants. Participants were shown one animation at a time and asked to identify, for each, whether the motion of the avatar belonged to themselves or to another participant. Both the final decision and its accuracy were recorded, as well as the physical movements performed by the participant while making that decision (Figure 2). Preliminary evidence was found for an alignment between our physical motions and the decision-making process when evaluating perceived motion (Figure 3). Furthermore, decision time differed significantly when the stimulus carried the motions of the participant as opposed to when the motion belonged to someone else. Participants were able to recognize their own motions at accuracy levels above chance. Future work will expand the size of the sample and diversify the motion routines performed for the purpose of creating the stimuli. The task will also be expanded to include the ability to identify familiar and unfamiliar persons. The team is currently using this paradigm with participants diagnosed with Autism in order to better understand the connection between social impairments and perceptual impairments in Autism. It is also hoped that an adaptive tool can be developed to help individuals with Autism interact successfully with others through perceptual learning.
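One way the above-chance recognition reported in this paragraph could be checked is with an exact one-sided binomial test. The sketch below is illustrative only: the function name `binomial_tail_p` is hypothetical, the chance level of 0.5 assumes a balanced two-choice (self versus other) task, and the source does not state which statistical test the team used.

```python
from math import comb


def binomial_tail_p(correct, trials, chance=0.5):
    """Exact one-sided p-value: P(X >= correct) for X ~ Binomial(trials, chance).

    chance=0.5 is an assumption for a balanced self-versus-other judgment.
    """
    return sum(
        comb(trials, k) * chance ** k * (1 - chance) ** (trials - k)
        for k in range(correct, trials + 1)
    )
```

A small p-value for a participant's count of correct responses would indicate accuracy that is unlikely under pure guessing. The appropriate chance level depends on how many self and other stimuli were actually shown.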

This work will be presented at the upcoming meeting of the Society for Neuroscience (Johnson, G., Yanovich, P., DiFeo, G., Yang, L., Santos, E. M., Ross, N., & Torres, E. B. (2012). Congruent map between the kinesthetic and the visual perceptions of our physical movements, even with noise. Society for Neuroscience Conference, Ernest N. Morial Convention Center, New Orleans, LA).

Address Goals

Discovery: The social information we are able to extract by perceiving biological motion has not yet been sufficiently explained. This work seeks to unveil an explanatory connection between our perception of our own motions and our ability to align that percept with our visual perception of the motions of others. Not only is this a novel discovery in understanding the intact human perceptual system in healthy individuals, but it also has the potential to explain the role of the impaired human perceptual system in persons with Autism Spectrum Disorders. This may lead to better diagnoses and treatment of Autism. Furthermore, the objective quantification of the percept that emerges from the kinesthetic sensing of our bodies in motion gave rise in this work to a power relation. This new discovery links body movements to mental processes in an unprecedented manner that will open many new questions in fields of Psychology and Cognitive Science. The framework that we have introduced to assess our questions blends in naturally with the field of Psychophysics. Since the times of Weber, Fechner, and Stevens, this field has assessed the perception of external sensory stimuli and uncovered law-like relations between our senses and our perception of the external physical world, yet the perception of our own body motions had never before been characterized by any law-like relation.

Learning: This project is the result of an interdisciplinary perceptual science course supported by the NSF-IGERT. In this hands-on course, students from Psychology and Computer Science alike learned to apply methods developed in the computer science community to a classical problem in psychology. Thus the project fostered interdisciplinary collaboration and learning, preparing the next generation of scientists to embrace and contribute to interdisciplinary projects in the future.